Completing Evaluations

Evaluations are a key component of your Quality Assurance program. They provide a consistent and structured way to measure performance, identify strengths and opportunities, and ensure that every customer interaction reflects your organization’s standards.

Before completing evaluations, make sure your scorecards and scoring models are already set up. These define what will be measured and how each interaction is scored.

To learn how to build a scorecard, see Building your Scorecard Structure.

To learn more about scoring methods, see Understanding Scoring Models.

Once your scorecards are complete and published, you can begin completing evaluations, either manually by a quality analyst or automatically with AI, to capture insights, measure consistency, and drive meaningful coaching discussions.

Begin Evaluation

To begin an evaluation, you must first select the agent you will be evaluating and the scorecard you want to use.

You can start a new evaluation, and select both the agent and scorecard, from any of four places:

- Users – Start an evaluation directly from the user list by selecting an individual agent.

- Teams – Begin an evaluation from the team view to evaluate an agent within a specific team.

- Scorecards – Start an evaluation by choosing a specific scorecard first, then select the agent.

- Evaluations – Start an evaluation from the Evaluations page, either by repeating a previous evaluation using Evaluate Again or by creating a new one with + New Evaluation.

Users

To start an evaluation from the Users menu, go to the Organization section and select Users. Locate the user you want to evaluate, then click the Action icon at the end of their row and select Evaluate.

A session setup window will appear with the selected user already pre-populated. From there, choose the scorecard you want to use for the evaluation.

Teams

To start an evaluation from the Teams menu, go to the Organization section and select Teams. Locate the team you want to view, then click the Action icon at the end of the row and select View. This will display all users assigned to that team.

Find the agent you want to evaluate, click the Action icon at the end of their row, and select Evaluate. A session setup window will appear with the agent pre-populated. Choose the scorecard you want to use, just as you would when starting an evaluation directly from the Users menu.

Scorecards

To start an evaluation from a specific scorecard, go to the Quality menu and select Scorecards. This will display all available scorecards. Locate the scorecard you want to use, then click the Action icon at the end of the row and select Evaluate.

An evaluation setup window will appear with the selected scorecard already pre-populated. You will then be prompted to choose the agent. A selection window will open where you can select only one agent to evaluate.

Evaluations

The Evaluations page offers two ways to start an evaluation:

- Evaluate Again – Use this option to repeat a previous evaluation with the same agent and scorecard. Go to the Evaluations page, locate the completed evaluation you want to repeat, click the Action icon at the end of the row, and select Evaluate Again. A new evaluation will open with the same agent and scorecard pre-populated, allowing you to continue evaluating the agent.

- + New Evaluation – Start a brand-new evaluation directly from the Evaluations page. Click the + New Evaluation button to open a new evaluation setup window. You will need to select both the agent and the scorecard you want to use before beginning the evaluation.

Completing an Evaluation

Evaluations can be completed in two ways: Manual or AI.

Each scorecard has two main sections, Meta Data and Scoring (or Audit), and the process for completing them differs depending on the evaluation type.

Manual Evaluations

In a manual evaluation, the quality analyst completes all fields directly.

Meta Data fields are free-form inputs used to capture information that is not tied to a specific Yes/No or Selective response. These may include details such as call reason, unique ID, or other contextual information entered by the analyst.

The Scoring/Audit section contains the measurable criteria defined by the scorecard’s scoring model. Depending on the model, responses will be one of the following:

- Yes / No / N/A – for Weighted, Equal, and Audit models

- 0 / 1 / 2 / N/A – for Selective models

Each scoring or audit section also includes a + Notes option. Analysts can enter notes to provide context or specific feedback for each section. These notes appear on the final evaluation for both the manager and agent to review.

AI Evaluations

In an AI evaluation, both the Meta Data and Scoring/Audit sections are completed automatically by the AI.

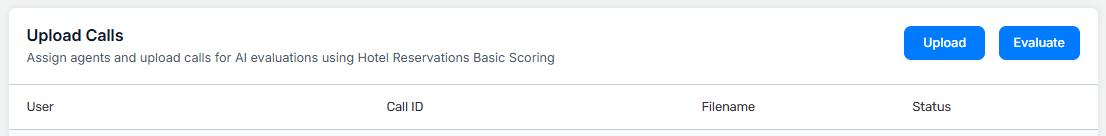

After selecting the agent and scorecard, the evaluator will be taken to the Upload Calls page. From there:

- Click Upload to upload one or more calls.

- Assign each uploaded call to the correct agent.

- Once all calls are uploaded and assigned, click Evaluate to begin the AI evaluation process.

The AI will transcribe each call into a readable transcript, then compare that transcript against the scorecard criteria using the AI scoring instructions. The evaluation will appear as Pending until the process is complete.

Coaching Session

Once either the manual or AI evaluation is completed, it is automatically sent to the evaluator or assigned manager for coaching.

The manager can review the entire evaluation, complete the coaching session, and record commitments or feedback. The completed evaluation and coaching session will then appear on the agent's dashboard for review.

Note: Evaluations using the Audit scoring model are for data collection only. They do not generate a coaching session or appear in the manager’s queue but remain accessible for reporting and trend analysis.